Ever since I first watched Terminator 2 on VHS in the mid-90s (I wasn't old enough to legally watch it, don't tell the pooolice), I've wondered whether I'll still be alive by the time it's possible for a robot to kill me.

I mean, I don't want to die, most of the time. Sometimes I say I do when I have a hangover, because that's what people say these days. But I don't want to die. That said, I will have to die at some point, and fighting the machines on Judgement Day would be a pretty decent way to go, in all honesty.

But here I am at the age of 26, and the robots we have are, for the most part, either completely useless or eerily seductive hotel receptionists in Japan.

So will I still be alive, and young enough to enlist in the Great Robot Fighting Army Of The Future (GRFAOTF)*? In all likelihood, yes, I will be.

As it happens, a robot has already killed somebody. Last July, at a Volkswagen plant in Baunatal, about 100km north of Frankfurt, Germany, a 22-year-old man was helping to set up a stationary robot that grabs and configures auto parts when the machine grabbed him and pushed him against a metal plate, the Associated Press reported. He later died from his injuries.

While that's undeniably tragic, it's also undeniably a complete freak accident; it wasn't a conscious decision made by an AI. It's a warning though, and we could be within touching distance of robots intentionally going out of their way to fuck us up.

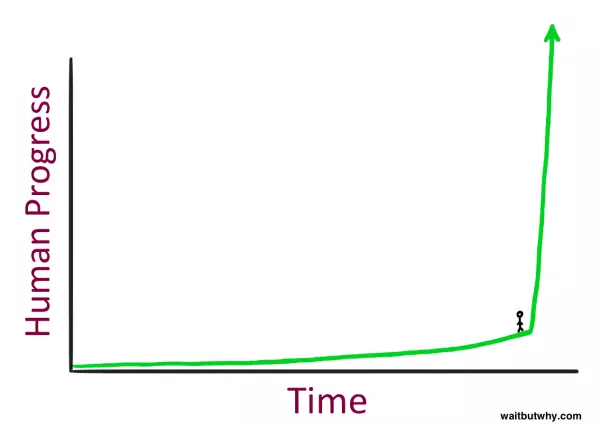

According to this piece, expertly crafted by Tim Urban, technology is advancing at a rate we've never experienced before, and it shows no signs of slowing down. By his reckoning, we are less than 50 years from the, er, Rise Of The Machines.

There's a chance you're reading this and thinking: 'Are we really that advanced? Things don't feel like they're changing all that much. Like, yeah, the self-service till is great and PayPal is cool, but what else?'


Well here's what else...

Imagine picking a man up from the year 1750 and letting him walk around Earth today. Tim wrote about what it would be like to let that man 'play with my magical wizard rectangle that he could use to capture a real-life image or record a living moment, generate a map with a paranormal moving blue dot that shows him where he is, look at someone's face and chat with them even though they're on the other side of the country, and worlds of other inconceivable sorcery. This is all before you show him the internet or explain things like the International Space Station, the Large Hadron Collider and nuclear weapons.'

That would be a lot for him to take in, to the point where he might actually die from shock, no joke. But what if 1750 man then went back a further 266 years to the year 1484 (call it 1500), picked someone up and brought him to 1750? What would that guy see?

'The 1500 guy would be shocked by a lot of things - but he wouldn't die,' Tim wrote. 'It would be far less of an insane experience for him, because while 1500 and 1750 were very different, they were much less different than 1750 to 2015. The 1500 guy would learn some mind-bending shit about space and physics... But watching everyday life go by in 1750 - transportation, communication, etc. - definitely wouldn't make him die.'

That says a lot about what we've achieved over the last two and a half centuries. Technological advancement is a business and, as it happens, business is a-boomin'.

Futurist Ray Kurzweil believes that the 21st century will achieve 1,000 times the progress of the 20th century.


You'd think that pace means AI is about to arrive at a supreme rate. Wrong: AI is already a huge part of our lives. We just don't notice it much because we associate the term 'AI' with bonkers sci-fi films. Your phone's calculator, Google's troublesome self-driving cars, Siri: these are all AI.

Great, none of those things can kill us. ASI (Artificial Superintelligence) though, that's a different matter. Oxford philosopher and leading AI thinker Nick Bostrom defines superintelligence as "an intellect that is much smarter than the best human brains in practically every field, including scientific creativity, general wisdom and social skills." Artificial superintelligence ranges from a computer that's just a little smarter than a human to one that's trillions of times smarter, across the board.

Tim also wrote that Kurzweil believes computers will reach AGI (Artificial General Intelligence, like ASI but not as good) by 2029 and that by 2045 we'll have not only ASI, but a full-blown new world.

And sci-fi movies (Star Wars, Terminator, 2001: A Space Odyssey) aside, that's a scary thought and an unpredictable one. It throws up the question: 'Why would we build something that could hurt us?' One possible answer is that the first people to achieve ASI will be exactly that, the first people to do so, and there is a massive corridor of uncertainty when it comes to being the first to do anything. It also throws up a basic Darwinian question: is creating something smarter than you ever a good idea?

Basic common sense would tell you no, but common sense is very rarely applied to technology. We made the nuclear bomb, after all.

We will achieve ASI, and soon, and that's great, but will we achieve it before we achieve AI safety? There's no guarantee of that, and there's also no guarantee that those who achieve ASI won't want it to be the end of humanity.


Tim calls this the Jafar Scenario. 'Like when Jafar got ahold of the genie and was all annoying and tyrannical about it. So yeah - what if ISIS has a few genius engineers under its wing working feverishly on AI development? Or what if Iran or North Korea, through a stroke of luck, makes a key tweak to an AI system and it jolts upward to ASI-level over the next year? This would definitely be bad - but in these scenarios, most experts aren't worried about ASI's human creators doing bad things with their ASI, they're worried that the creators will have been rushing to make the first ASI and doing so without careful thought, and would thus lose control of it.'

For this article, I owe a huge amount to Tim Urban, whose supremely well-researched piece is a truly eye-opening read. So it feels only right to let one of his quotes close this one: 'This may be the most important race in human history. There's a real chance we're finishing up our reign as the King of Earth - and whether we head next to a blissful retirement or straight to the gallows still hangs in the balance.'

Words by Matthew Cooper


*Acronym needs work

You can read more from Tim here.

Lead Image Credit: TriStar
