Millions of people turn to ChatGPT every single day for help with anything from drafting an email to researching a DIY project, and pretty much everything in between.

However, one woman has revealed how the AI chatbot 'nearly killed' her best friend, a reminder that every answer should be taken with a pinch of salt.

YouTuber Kristi took to Instagram to warn her followers of the dangers that could potentially come with blindly following information from the service, after her friend received advice that could've been fatal.

Kristi, who has nearly half a million followers on her account @rawbeautybykristi, shared the tale of how her pal nearly poisoned herself after ChatGPT reassured her that a poisonous plant in her backyard was actually completely harmless.

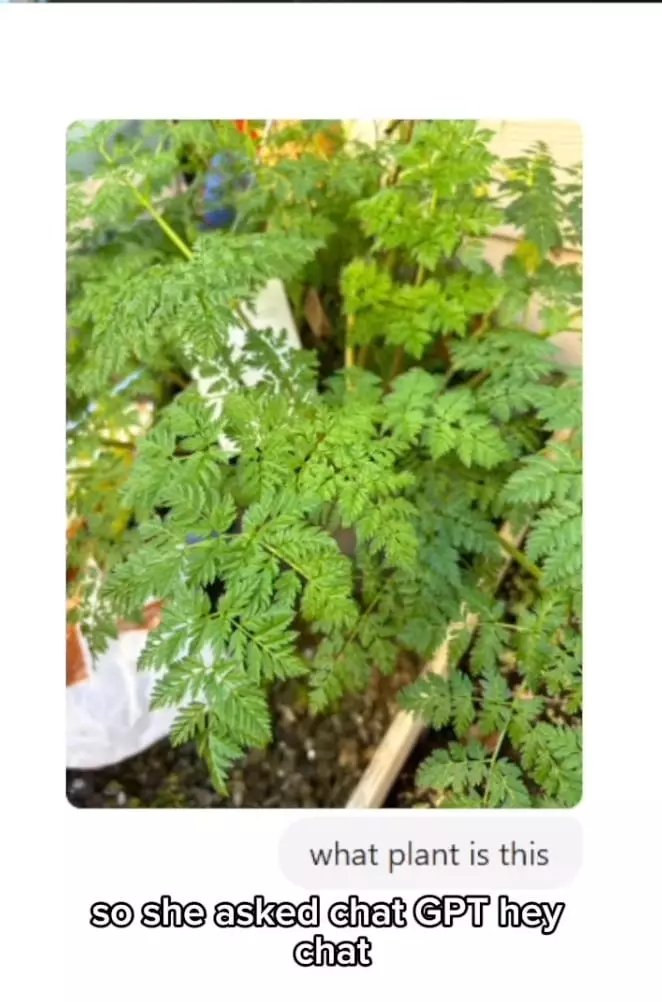

The friend sent the chatbot a photo of the unidentified plant, asking 'what plant is this,' only to be told it looked like carrot foliage. According to screenshots, ChatGPT went on to list several reasons it was 'confident' the plant was carrot foliage, including the 'finely divided and feathery leaves,' which are very 'classic' for carrot tops.

Interestingly, the chatbot went on to list some common lookalikes of carrot foliage, including parsley, cilantro (or coriander for us Brits), Queen Anne's lace and, shock horror, poison hemlock.

When Kristi's friend directly asked if the plant in the photo was poison hemlock, she was met with multiple reassurances it wasn't.

"I don't know if you guys know this, you eat it, you die. You touch it, you can die," Kristi told her followers, before sharing an answer she received on Google, which stated that poison hemlock causes 'systemic poisoning' for which there is no antidote.

After sharing another photo with ChatGPT, the friend was reassured once again that the plant was not poison hemlock because it did not show smooth, hollow stems with purple blotching, despite the image appearing to show exactly that.

What's even more concerning is that the friend was encouraged to label the plant, incorrectly, as carrot foliage, on the assumption that it might be growing in a shared garden at the school where she works.

When Kristi put the same photo into Google Lens, another AI platform that allows you to search images, the responses immediately confirmed it was in fact poison hemlock. Her friend then put the same images into a different ChatGPT window on her phone and was also immediately told the plant was poisonous.

"She's a grown adult and she knew to ask me beyond what ChatGPT said thank God, because what if she wasn't? They would literally be dead, there is no antidote for this," Kristi said.

"This is a warning to you that ChatGPT and other large language models and any other AI, they are not your friend, they are not to be trusted, they are not helpful, they are awful and they could cause severe harm."

LADbible has approached ChatGPT for comment.
