She continues to speak up despite online backlash

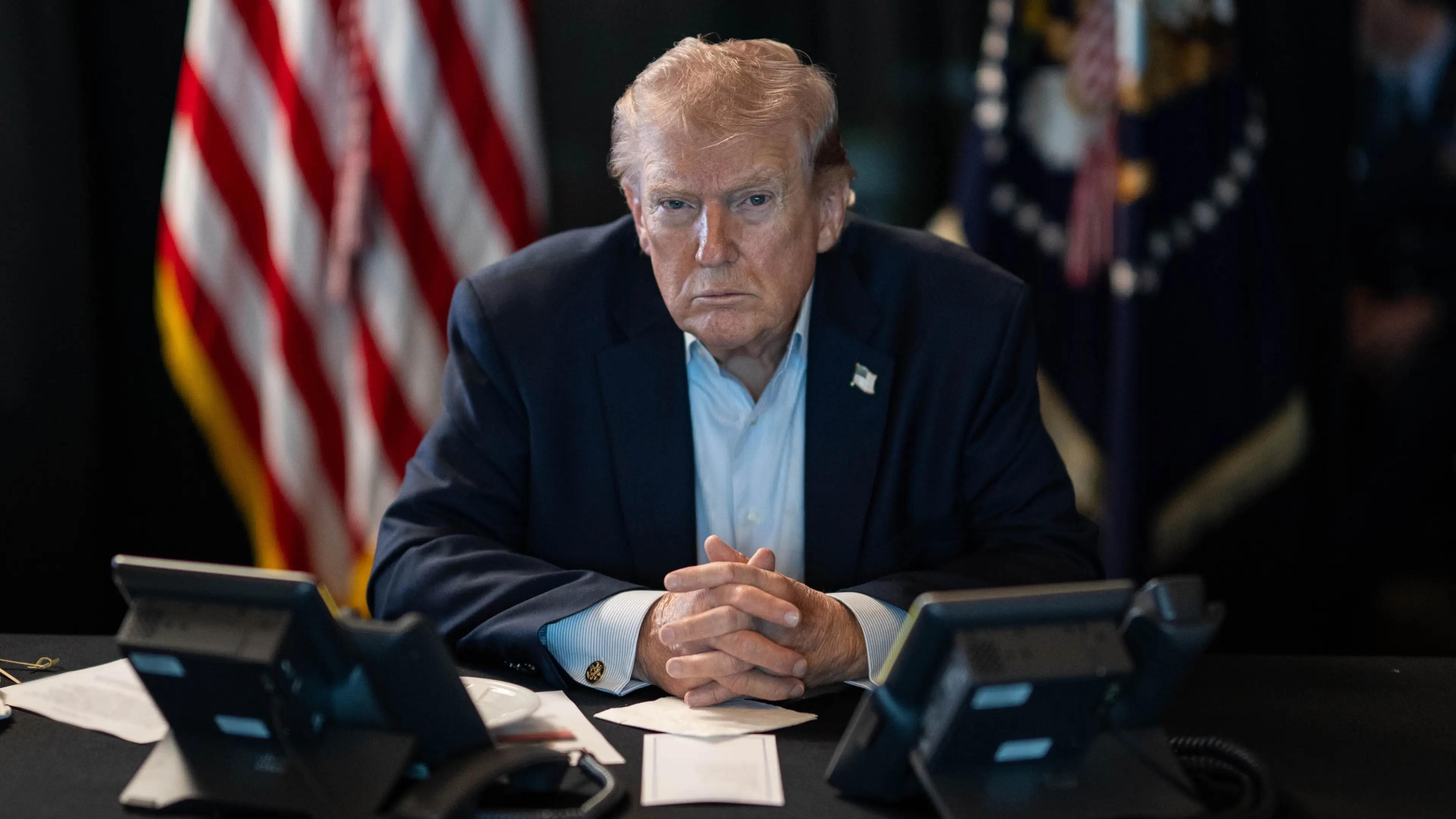

He criticised the US, Israel and Iran for playing 'Russian roulette with the destiny of millions'

The 22-year-old wants to raise awareness of her condition

Igor Komarov, 28, said his abductors had 'already chopped off some of his limbs' in the haunting video

Corey Warren explained that drinking is likely affecting you more than you think

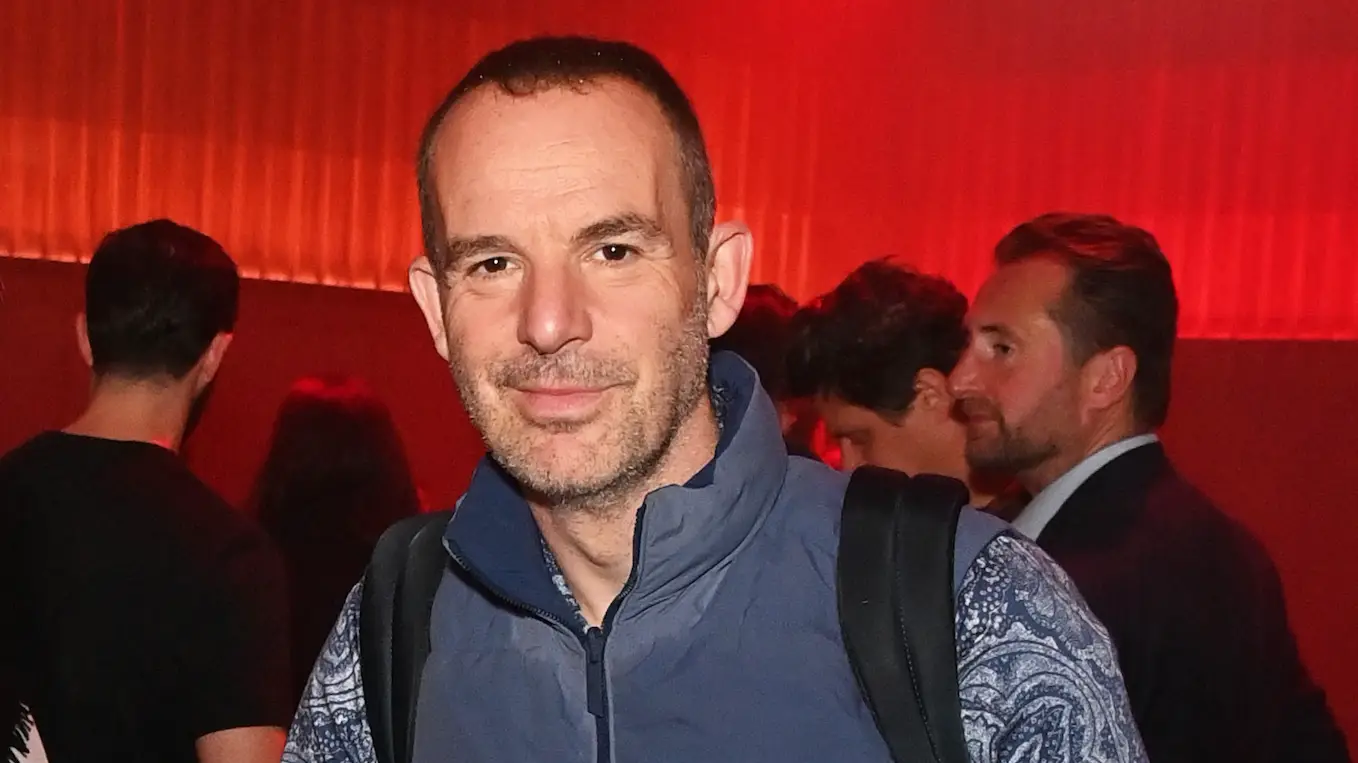

The Money Saving Expert has issued an update on a proposed compensation scheme for car finance mis-selling

The 79-year-old previously suggested he had got to Iran's supreme leader before the leader could get to him

The study could lead to more personalised ADHD treatment

Experts at Resolution Foundation are warning of a 'fresh energy price shock' amid war in the Middle East

Exercise is one of the best things for physical and mental health

Molly Lambert began having intrusive sexual and violent thoughts at 15 years old

Prime Minister Pedro Sánchez has accused Donald Trump of instigating the breakdown of international law

The 37-year-old has pleaded not guilty at Chelmsford Crown Court

Timothy Howells was found at the wheel of his driving school car, in a ditch

People were informed that the sirens would sound 'in the event of a threat or emergency of natural or man-made origin'

It appears Brits are not happy about Donald Trump's decision to launch strikes on Iran

Brits stuck on cruise ship in Dubai say people 'danced round the pool' as drones were fired overhead

Many UK nationals are stuck in the Middle East

A White House official has since responded to the allegations

A chartered flight for Brits looking to escape the conflict is set to leave tonight

Taking creatine, one of the most well-researched supplements, could provide a number of health benefits

A new investigation has found there's a lot going into food and drink without getting the green light

Motorists have been told there's no need to panic-buy petrol