Seven other countries will also boycott the ceremony

It comes more than five years after the initial incident

NASA has provided an update on near-Earth asteroid 2024 YR4

It isn't as bad as many might think

Applegate says she was 'disgusted by what came out of my mouth'

New York Democratic Rep. Alexandria Ocasio-Cortez has slammed President Trump

An investigation by Poland-based news outlet Vot Tak has found that Russia is fast-tracking war veterans from hospital wards

There are a lot of people all trying to leave on the same flights

Russian and Belarusian flags will be flown across Lombardy and Northeast Italy

Yvonne Ford, from Barnsley in Yorkshire, died after contracting the fatal virus on holiday in Morocco

Molly Lambert used to question if her loved ones were 'safe' around her

Police are still searching for six suspects

Dr Eric Berg told his 15 million YouTube followers why constant snacking can be so detrimental to our health

Let’s be real: a pub isn’t simply a building where you grab a pint.

She was sentenced to 19 years behind bars for having her fiancé killed

Several other countries will also not be involved with the opening ceremony tonight (6 March)

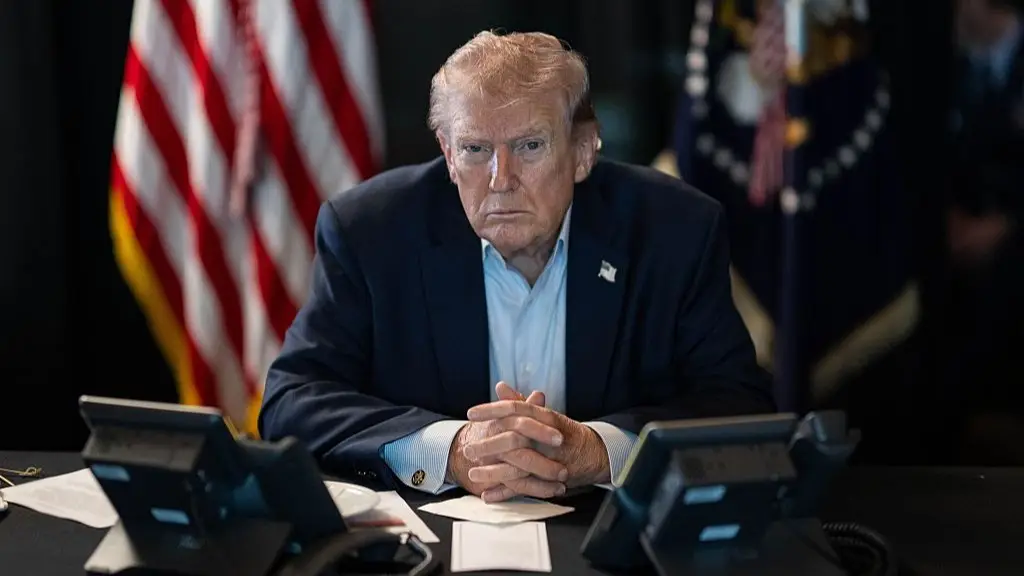

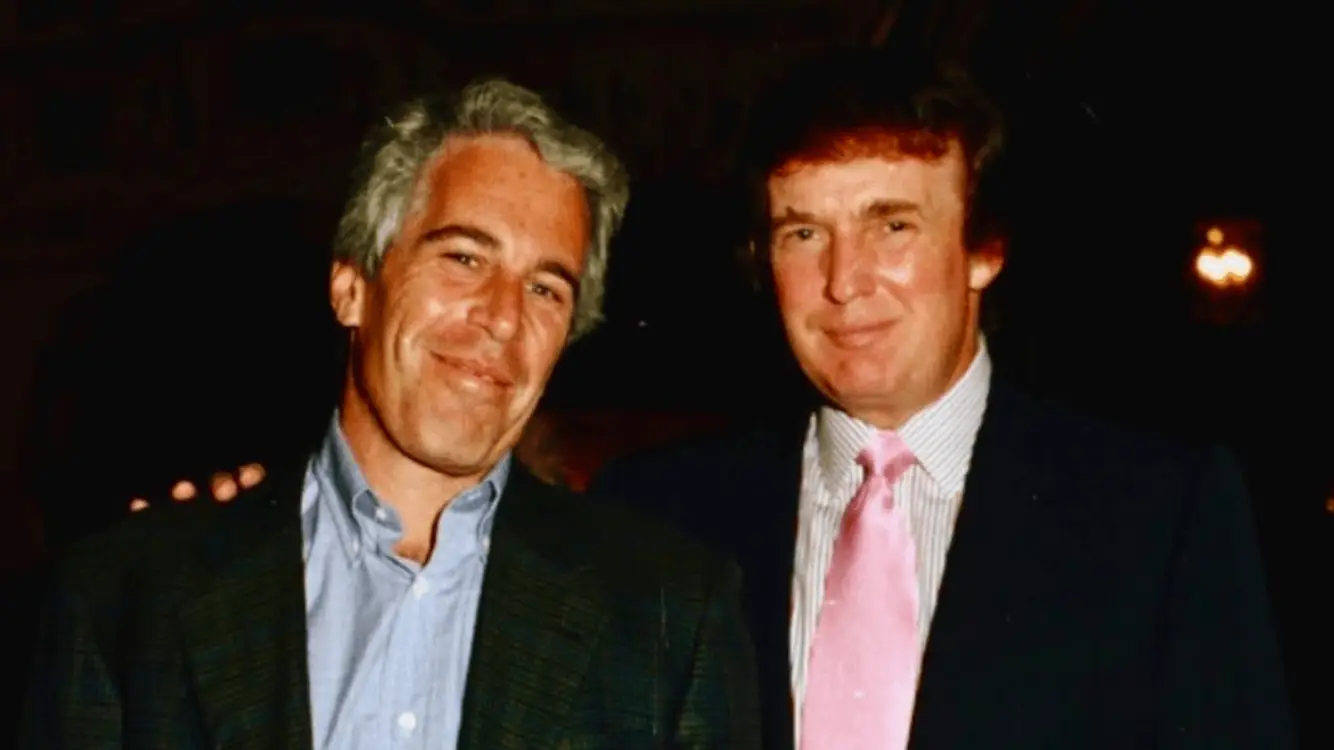

The Department of Justice has explained why the documents weren't included in the original Epstein files release

The 'Toxic' singer has since been released and is expected to appear in court on 4 May

He has been in hospital since the attack

Combine this supplement with your vitamin D to maximise benefits and minimise side effects

Google's 'results about you' tool allows you to check how your data shows up in search results

You may have noticed an influx of praise for UAE leaders online

Craig Hamilton-Parker, known as the 'Prophet of Doom', has made another controversial forecast